Where are we with robotics foundation models?

Ape-like intuition and the limitations of thinking in words

It's time to take stock of where we are with foundation models for robotics.

I briefly wrote about foundation models for robotics in Robots That Work.

The argument there was that non-deterministic approaches like VLMs/VLAs are currently hard to deploy in the field because they lack the interpretability that allows tracking of workflow completion, as well as a deterministic feedback loop in which an operator can step in and fix things.

In substance, they violate the principle that, for project-industry users (construction, trades, maintenance, etc.), robotics needs to feel like new tooling rather than an obscure new machine whose uptime we have to worry about.

Nevertheless, the prospect of creating embodied intelligence that enables (1) generalization of robot behavior, (2) quick deployment to new tasks and (3) robustness across environments and scenes is exciting, and worth following closely to stay up to date with what is happening in robotics right now.

2025 was the year of VLAs.

Here is an extremely quick recap of how they work, starting from their primitives:

- LLMs -> text-in, text-out. They know what things are (i.e., they encode them) when those things are expressed in language.

- VLMs -> vision/language-in, language-out. They encode visual input (images and video) into a latent space where pictures and words dance together. The model "sees" a messy construction site: not just "a wrench", but "a 12-inch adjustable wrench lying under a layer of drywall dust, with black tape in the corner of the frame".

- VLAs take this a step further -> vision/language-in, actions-out. Instead of a programmer writing thousands of lines of "if-then" logic for every joint movement, a VLA generates "action tokens." You give it a high-level command like "Hand me the impact driver" and the model translates the pixels it sees directly into the motor torques required to reach out and grab it.
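To make "action tokens" a bit more concrete, here is a minimal sketch of one common scheme, in the spirit of RT-2-style VLAs: each continuous action dimension is clipped to a fixed range and discretized into a fixed number of bins, so the model can emit actions as tokens the same way it emits words. The 256 bins, the normalized [-1, 1] range and the hypothetical 7-dimensional action (3 translation deltas, 3 rotation deltas, 1 gripper command) are assumptions for illustration, not any specific model's spec.

```python
import numpy as np

N_BINS = 256           # assumption: number of tokens per action dimension
LOW, HIGH = -1.0, 1.0  # assumption: actions are normalized to [-1, 1]

def actions_to_tokens(action: np.ndarray) -> np.ndarray:
    """Map a continuous action vector to integer token ids in [0, N_BINS - 1]."""
    clipped = np.clip(action, LOW, HIGH)
    # Scale to [0, 1], then to a bin index; the top edge falls into the last bin.
    scaled = (clipped - LOW) / (HIGH - LOW)
    return np.minimum((scaled * N_BINS).astype(int), N_BINS - 1)

def tokens_to_actions(tokens: np.ndarray) -> np.ndarray:
    """Map token ids back to the center of each bin (a lossy inverse)."""
    bin_width = (HIGH - LOW) / N_BINS
    return LOW + (tokens + 0.5) * bin_width

# Hypothetical 7-D action: 3 translation deltas, 3 rotation deltas, 1 gripper command.
action = np.array([0.10, -0.25, 0.0, 0.05, 0.0, -0.9, 1.0])
tokens = actions_to_tokens(action)
recovered = tokens_to_actions(tokens)
print(tokens)
print(np.abs(recovered - action).max())  # never more than half a bin width
```

The round trip is lossy by design: each recovered value sits at the center of its bin, trading a little precision for a vocabulary that a language-model backbone can predict token by token.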

Notice, however, that I did not say "the model understands what an impact driver is". There is almost no physics understanding in what just happened; rather, the model recognizes a blob of pixels and some words and acts in a way that maps to the words you fed it.

Jim Fan uses the ape example to make the case that dexterous physical intelligence does not necessarily come from language: apes are no better at language than GPT-1, yet they have a solid mental model of "what happens if I tip this thing over".

Enter World Models -> the "internal simulator" that gives a robot physical common sense. Fan argues that to reach true autonomy, a robot shouldn't just map pixels to motor torques; it needs to predict the future.

Ok sure, but how? To understand how people are thinking about this problem, let's try to clarify the terms. "World Models" is too broad a definition, and I certainly do not want to get anything wrong about the different approaches being taken to research them.

I'm not a PhD researcher, but I still have some of my steel shins from my engineering days, so I'll dare to divide the world of "World Models" into two broad categories:

- Generative models or Simulators (like NVIDIA Cosmos) vs.

- Non-generative models (like Meta’s JEPA)

World Simulators treat world modeling as a video generation problem. If you ask the model, "What happens if I hit this nail with a hammer?", it attempts to render a high-fidelity video of the event. These models are increasingly popular among researchers for creating synthetic training data. They allow developers to generate millions of "scenarios" (like a pipe bursting or a crane collapsing) to train smaller, reactive models without risking real equipment.

NVIDIA has a ton of material about this. I spent hours down the rabbit hole of their content, and I'll be publishing another article on their "Three Computer Strategy".

A tiny spoiler: computationally intensive approaches = more compute to sell for them.

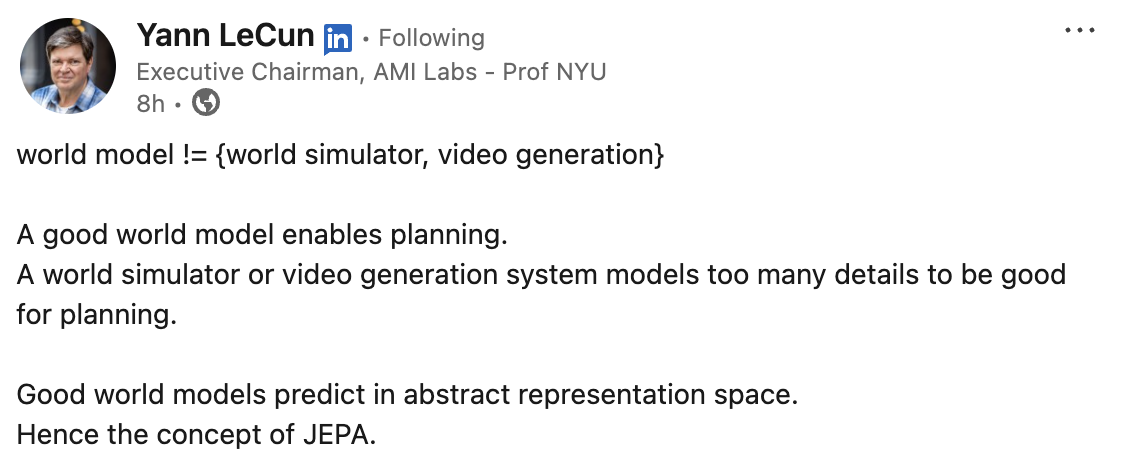

However, Yann LeCun thinks this is the wrong way to go about it:

JEPA stands for Joint-Embedding Predictive Architecture. This class of models cannot generate video natively. Instead, they predict in an abstract mathematical space (latent space), which is hard as hell to visualize.

Think of it like this: if a character moves behind a tree, JEPA doesn't care about the texture of the bark or the color of the sky. It only cares about the mathematical representation that "Object A is now hidden by Object B."

Because it doesn't waste time "painting" the world, it can simulate thousands of possible futures in milliseconds to find the best path.
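The "simulate many futures and pick the best one" idea can be sketched as random-shooting model-predictive control in a latent space: sample many candidate action sequences, roll each one forward with a latent dynamics model, score each imagined end state against a goal embedding, and execute only the first action of the best sequence. The linear "learned" dynamics, the sizes and the distance-to-goal cost below are all hypothetical stand-ins; this shows the general shape of planning with a latent world model, not JEPA's actual planner.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM, ACTION_DIM = 8, 2     # assumption: toy sizes
HORIZON, N_CANDIDATES = 5, 1000   # assumption: 5 steps ahead, 1000 imagined futures

# Hypothetical stand-in for a learned latent dynamics model: z_next = A z + B a.
A = np.eye(LATENT_DIM) * 0.95
B = rng.normal(scale=0.3, size=(LATENT_DIM, ACTION_DIM))

def predict_next(z: np.ndarray, a: np.ndarray) -> np.ndarray:
    """Batched one-step prediction in latent space; no pixels are ever rendered."""
    return z @ A.T + a @ B.T

def plan(z0: np.ndarray, z_goal: np.ndarray) -> np.ndarray:
    """Random-shooting MPC: return the first action of the best sampled sequence."""
    # Candidate action sequences, shape (N_CANDIDATES, HORIZON, ACTION_DIM).
    actions = rng.uniform(-1.0, 1.0, size=(N_CANDIDATES, HORIZON, ACTION_DIM))
    z = np.repeat(z0[None, :], N_CANDIDATES, axis=0)
    for t in range(HORIZON):
        z = predict_next(z, actions[:, t])
    # Cost: distance between each imagined final latent state and the goal latent.
    costs = np.linalg.norm(z - z_goal, axis=1)
    best = int(np.argmin(costs))
    return actions[best, 0]

z0 = rng.normal(size=LATENT_DIM)   # hypothetical current state embedding
z_goal = np.zeros(LATENT_DIM)      # hypothetical goal embedding
first_action = plan(z0, z_goal)
print(first_action)
```

Because every rollout is a couple of small matrix multiplies instead of a rendered video, evaluating a thousand candidates is cheap. A common refinement of plain random shooting is the cross-entropy method, which resamples around the best candidates for a few iterations.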

[Rabbit hole zone]

I spent hours with a patient friend who is an ML engineer trying to answer the question: “OK, but who tells JEPA that the relative position of objects A and B is more important than the state of the bark?”

In short, the answer is that JEPA learns what’s important through its training objective: predicting future/masked representations in latent space rewards capturing predictable patterns (object motion, occlusion) while punishing wasted capacity on unpredictable noise (random bark textures). The model discovers that relationships and physics are compressible and predictive, while pixel-level details are neither, so it naturally prioritizes the former to minimize prediction loss. If you're willing to spend 10 hours on this, Rohit Bandaru has a great deep dive on the foundational architecture.
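Here is a minimal numpy sketch of the shape of that objective, assuming linear encoders: a context encoder embeds the visible part of the input, a predictor maps that embedding to a guess of the masked part's embedding, and the loss is computed between embeddings rather than pixels. The target encoder is an exponential moving average (EMA) of the context encoder, one of the tricks I-JEPA-style models use to keep everything from collapsing to the same vector. All sizes, the linear stand-ins and the EMA rate are illustrative assumptions; real JEPAs use vision transformers over image or video patches, and the gradient step is omitted here.

```python
import numpy as np

rng = np.random.default_rng(0)

PATCH_DIM, EMBED_DIM = 48, 16  # assumption: flattened patch size and latent size
EMA_DECAY = 0.996              # assumption: illustrative EMA rate

# Linear stand-ins for the real networks.
W_context = rng.normal(scale=0.1, size=(EMBED_DIM, PATCH_DIM))  # trained by gradient descent
W_pred = rng.normal(scale=0.1, size=(EMBED_DIM, EMBED_DIM))     # trained by gradient descent
W_target = W_context.copy()  # target encoder starts as a copy of the context encoder

def jepa_loss(context_patch: np.ndarray, target_patch: np.ndarray) -> float:
    """Squared error between predicted and actual target *embeddings*, never pixels."""
    s_pred = W_pred @ (W_context @ context_patch)
    s_target = W_target @ target_patch  # treated as a constant (stop-gradient) in training
    return float(np.mean((s_pred - s_target) ** 2))

def ema_update(w_target: np.ndarray, w_online: np.ndarray, decay: float = EMA_DECAY) -> np.ndarray:
    """The target encoder is not trained directly; it slowly tracks the context encoder."""
    return decay * w_target + (1.0 - decay) * w_online

# Hypothetical input: patch 0 is visible (context), patch 1 is masked (target).
patches = rng.normal(size=(2, PATCH_DIM))
loss = jepa_loss(patches[0], patches[1])
# A gradient step on W_context and W_pred would go here; omitted in this sketch.
W_target = ema_update(W_target, W_context)
print(loss)
```

Because nothing is ever decoded back to pixels, the encoders are free to leave unpredictable detail (the bark) out of the embeddings altogether, which is the pressure described in the paragraph above.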

You can probably see how there is a philosophical fork in the road between what NVIDIA and Meta are trying to do here.

NVIDIA says:

- "We think Cosmos will win because it is essentially a "hallucination engine" for physics. It’s a high-fidelity simulator that learns to render the next frame of reality (and we'll do this with our high margin GPUs!)"

Yann LeCun (who has now left Meta and is doing his own thing) says:

- "We will win because instead of predicting what the future looks like, JEPA predicts what the future means. If you’re digging a trench with an excavator, you don’t need your internal model to render the exact shape of every piece of dirt; you just need to know the resistance of the soil and the stability of your trench."

What I find cool about VL-JEPA (the Vision-Language version, read more here) is that it bridges the gap by encoding these physical "meanings" alongside linguistic ones. It doesn't use words to think; it uses words to label the concepts it already physically understands.
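One way to picture "using words to label concepts" is a nearest-neighbor lookup: the model predicts an embedding, and a word label is attached only at the end by comparing that embedding against embeddings of candidate phrases. This is a hypothetical illustration of the idea, not VL-JEPA's actual decoding mechanism; the phrases and the random embeddings are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
EMBED_DIM = 32  # assumption: toy embedding size

# Hypothetical text embeddings for a few candidate labels.
labels = ["object A hidden by object B", "object A in front of object B", "object A fell over"]
label_embeddings = rng.normal(size=(len(labels), EMBED_DIM))

def normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def label_for(predicted: np.ndarray) -> str:
    """Return the label whose embedding has the highest cosine similarity."""
    sims = normalize(label_embeddings) @ normalize(predicted)
    return labels[int(np.argmax(sims))]

# Pretend the vision side predicted an embedding close to the "hidden" concept.
predicted = label_embeddings[0] + rng.normal(scale=0.1, size=EMBED_DIM)
print(label_for(predicted))
```

The "thinking" happens entirely in the embedding; the words only show up when something needs to be reported to a human.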

For robotics to become tooling, interpretability is key. JEPAs may be a massive win for interpretability and reliability if they are trained well enough to stay focused on the causality that matters.

Companies I am working with are waiting for interpretability to become native to foundation models to enable hard manipulation tasks on their robotic platforms. They all operate in high-variance environments and, conceptually, foundation models are a perfect fit for deployments where everything constantly changes around the robot (think a construction site). That's generalized behavior, right?

Interpretability is what enables iterating (architect -> deploy -> test -> debug) on new features, and for foundation models it is not there yet. Until then, it's hard for companies building mission-critical machines to ship a reliable feature using these approaches to a customer in construction, inspection or maintenance. You can never be the reason a crew's uptime went down: the most valuable currency is track record.

A due disclaimer is that I have almost no way to evaluate which approach is best. I struggle to decipher what model evaluations mean, and I don't consider them great indicators of who's doing the best work. I'll be on the lookout to see what builders choose to build with and how the outcomes compare.

One thing is clear: it seems like we're finally moving past the era where we try to teach robots language first. We are teaching them the "ape-like" intuition of how objects move and break. Once they have that, the language layer becomes just a helpful interface, rather than the shaky foundation of the entire system. Even without a PhD, this makes total sense to me.

If you want to help me understand more, or explain why I'm completely off, ping me at gabriele@foundamental.com.